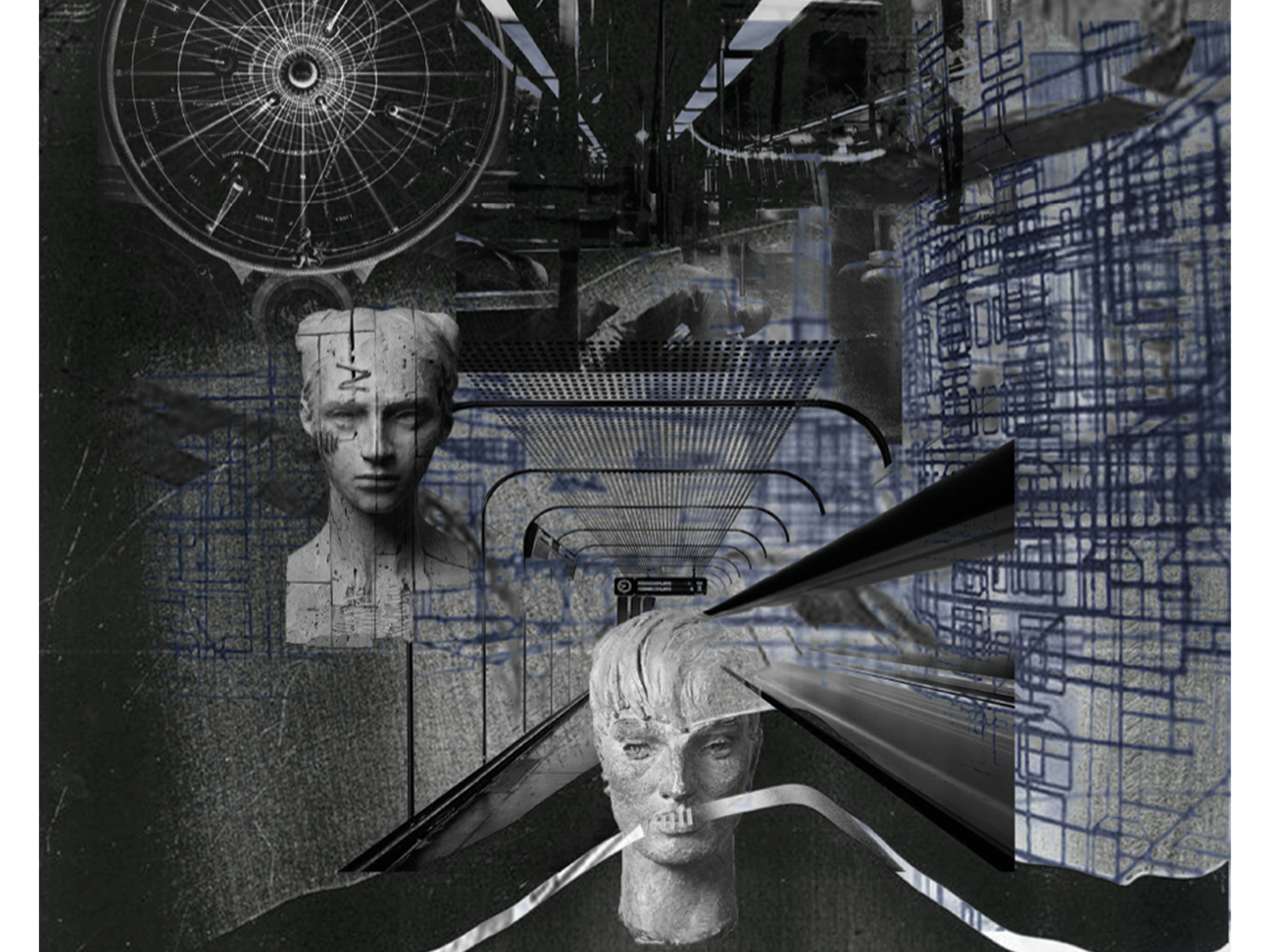

This project explores the synergy between AI and visual artistry. As the music plays, the objects in the animation respond fluidly, mirroring the rhythm, melody, and dynamics of the composition. This synchronization between sound and visuals creates a mesmerizing, immersive experience for the viewer.
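One way to picture this kind of audio reactivity is to measure how loud the music is during each video frame and use that value to drive an object property such as scale. The snippet below is a minimal sketch under that assumption, not the project's actual pipeline: the function names `frame_envelope` and `envelope_to_scale`, the RMS loudness measure, and the smoothing factor are all illustrative choices.

```python
import numpy as np


def frame_envelope(samples, sample_rate, fps):
    """Return one RMS loudness value per video frame, normalized to [0, 1]."""
    hop = sample_rate // fps                      # audio samples per video frame
    n_frames = len(samples) // hop
    frames = samples[: n_frames * hop].reshape(n_frames, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))   # loudness of each frame
    peak = rms.max()
    return rms / peak if peak > 0 else rms        # silence stays at zero


def envelope_to_scale(envelope, min_scale=1.0, max_scale=2.0, smoothing=0.6):
    """Map loudness to an object scale, smoothed so the motion does not jitter."""
    scales = np.empty_like(envelope)
    level = 0.0
    for i, value in enumerate(envelope):
        # Exponential smoothing: blend the previous level with the new loudness.
        level = smoothing * level + (1.0 - smoothing) * value
        scales[i] = min_scale + (max_scale - min_scale) * level
    return scales


if __name__ == "__main__":
    sample_rate, fps = 44100, 30
    t = np.arange(sample_rate * 2) / sample_rate
    # Synthetic stand-in for music: a 220 Hz tone whose volume pulses at 2 Hz.
    signal = np.sin(2 * np.pi * 220 * t) * (0.5 + 0.5 * np.sin(2 * np.pi * 2 * t))
    scales = envelope_to_scale(frame_envelope(signal, sample_rate, fps))
    print(len(scales), "frames, scale range:", scales.min(), "to", scales.max())
```

In a real setup the synthetic signal would be replaced by samples loaded from the audio track, and the per-frame scale values would be keyframed onto the animated objects. Other features, such as per-band energy, could drive other properties in the same way.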

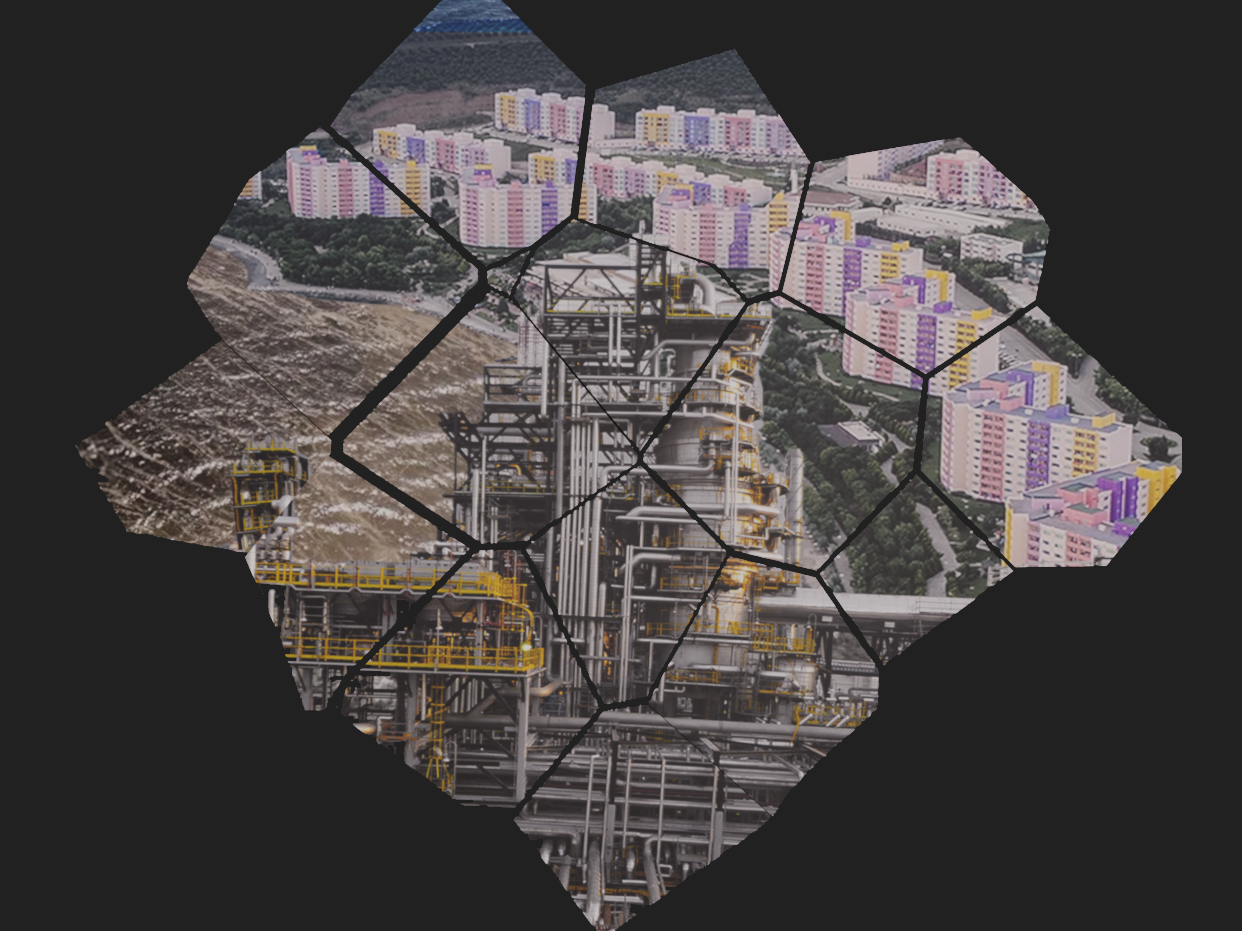

However, the true magic lies in Stable Diffusion’s ability to interpret the evolving animation in real time. Taking the animation as its visual input and reference point, Stable Diffusion generates a diverse array of new visuals. Through the interplay of the artist’s commands and the AI’s creative output, an ever-evolving sequence of imagery emerges.

As the tempo of the music fluctuates, the visuals seamlessly adapt and transform, presenting a dynamic progression of artistic expressions. Each frame unveils a unique composition, revealing intricate details and captivating transitions that unfold in harmony with the auditory elements.